Introducing Metropolis AI Workflows & Microservices 1.0: Revolutionizing Multi-Camera Tracking

Computer vision has advanced rapidly in recent years, driven largely by deep learning. One of the most exciting applications is smart video analytics: systems that track and analyze people’s movements across multiple cameras in real time.

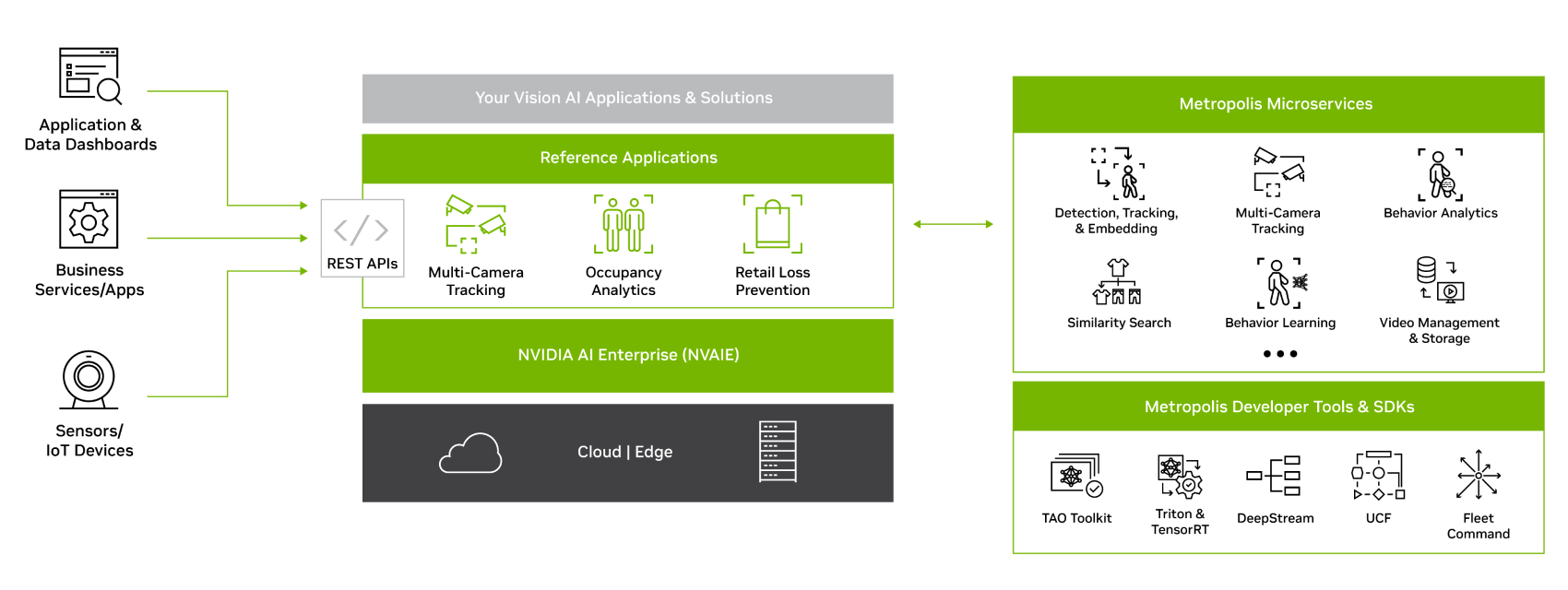

And now, we’ve hit an exciting new milestone in driving vision AI adoption. Metropolis AI Workflows & Microservices 1.0 is officially live and is set to revolutionize the way enterprises and our ecosystem approach centralized perception across an array of matrixed sensors.

One of the most exciting features of this release is the Multi-Camera Tracking app, which I had the pleasure of developing. The app is a reference architecture for video analytics applications that tracks people across multiple cameras and provides the counts of unique people seen over time. This is also known as Multi-Target Multi-Camera (MTMC) tracking.

With Multi-Camera Tracking, developers can extend their tracking capabilities to other camera views, including outdoor scenarios. The app takes live camera feeds as input and applies object detection, object tracking, streaming analytics, and multi-target multi-camera tracking in real time. It then exposes various aggregated analytics functions as API endpoints and visualizes the results in a browser-based user interface.
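To make "counts of unique people seen over time" concrete, here is a minimal sketch of that kind of aggregation. It is not the app's actual API: the function name `unique_counts`, the `(timestamp, global_id)` event shape, and the fixed 60-second window are all assumptions made for illustration, where `global_id` stands for the cross-camera identity that MTMC tracking assigns.

```python
from collections import defaultdict


def unique_counts(events, window_s=60):
    """Count distinct global IDs seen in each fixed time window.

    events: iterable of (timestamp_seconds, global_id) pairs. Both the event
    shape and the windowing scheme are hypothetical, chosen for illustration.
    Returns {window_start_seconds: number_of_distinct_ids}.
    """
    buckets = defaultdict(set)
    for ts, gid in events:
        window_start = int(ts // window_s) * window_s
        buckets[window_start].add(gid)
    return {start: len(ids) for start, ids in sorted(buckets.items())}


# The same person ("p1") seen several times, possibly on different cameras,
# is counted once per window because the set deduplicates by global ID.
events = [(3, "p1"), (10, "p2"), (42, "p1"), (61, "p1"), (95, "p3")]
print(unique_counts(events))
```

The count is only meaningful because the IDs are global: with per-camera track IDs, one person walking past three cameras would be counted three times.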

My role in developing the Multi-Camera Tracking microservice involved consuming the raw data and processing it into behaviors, such as trajectories and embeddings. These behaviors were then clustered based on their re-identification feature embeddings, subject to spatio-temporal constraints. This allowed us to track people accurately across multiple cameras, even in complex scenarios.
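The general idea of clustering on re-identification embeddings under constraints can be sketched as follows. This is an illustrative simplification, not the microservice's implementation: it uses average-linkage agglomerative clustering on cosine distance, and the only constraint is temporal (two tracklets that overlap in time on the same camera cannot be the same person). The `Tracklet` type, the `max_dist` threshold, and the function names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Tracklet:
    camera: str
    start: float     # seconds
    end: float       # seconds
    embedding: list  # re-identification feature vector


def cosine_distance(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (na * nb)


def conflict(a, b):
    """Same camera and overlapping in time: cannot be the same person."""
    return a.camera == b.camera and a.start < b.end and b.start < a.end


def cluster(tracklets, max_dist=0.3):
    """Greedy average-linkage clustering that never merges conflicting tracklets."""
    clusters = [[i] for i in range(len(tracklets))]
    while True:
        best = None
        for i in range(len(clusters)):
            for j in range(i + 1, len(clusters)):
                pairs = [(tracklets[a], tracklets[b])
                         for a in clusters[i] for b in clusters[j]]
                if any(conflict(a, b) for a, b in pairs):
                    continue  # constraint violated, skip this merge
                d = sum(cosine_distance(a.embedding, b.embedding)
                        for a, b in pairs) / len(pairs)
                if d <= max_dist and (best is None or d < best[0]):
                    best = (d, i, j)
        if best is None:
            return clusters
        _, i, j = best
        clusters[i] += clusters.pop(j)  # j > i, so index i stays valid


tracklets = [
    Tracklet("cam1", 0, 10, [1.0, 0.0]),
    Tracklet("cam2", 12, 20, [0.9, 0.1]),
    Tracklet("cam1", 5, 15, [0.95, 0.05]),  # overlaps tracklet 0 on cam1
    Tracklet("cam2", 30, 40, [0.0, 1.0]),
]
print(cluster(tracklets))
```

Tracklets 0 and 2 look alike but appear on the same camera at the same time, so the constraint keeps them in separate clusters even though appearance alone would merge them. A fuller version would likely add spatial checks as well, such as whether travel between two camera locations is plausible in the elapsed time.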

The Metropolis AI Workflows & Microservices 1.0 also includes several other exciting features, such as:

- Four fully customizable, cloud-native reference applications: Multi-Camera Tracking, Occupancy Analytics, Retail Loss Prevention, and Occupancy Heatmap

- A suite of microservices that provide advanced detection, tracking, and analytics of people’s movements and behaviors across multiple cameras, as well as few-shot learning capabilities for retail object detection

- REST API and other industry standards such as Kafka and Elasticsearch to query and extract metadata, enabling seamless feature expansion and/or integration with existing applications

- Easy deployment on any cloud or enterprise edge with NVIDIA AI Enterprise (NVAIE)

- A browser-based toolkit for camera calibration that maps between camera space and physical space

- Web UIs and Kibana dashboards for easy visualization of raw and processed data

- Jupyter Notebooks that illustrate how to use our microservices and API programmatically
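The calibration item in the list above refers to mapping between camera space and physical space. For a flat floor, one common way to express such a mapping is a 3x3 planar homography applied to a pixel coordinate, typically the bottom center of a person's bounding box. The sketch below only illustrates that idea under those assumptions; the matrix values are made up, and the toolkit's actual calibration format is not described here.

```python
def apply_homography(H, u, v):
    """Map pixel (u, v) to floor-plane coordinates (x, y) using a 3x3 homography H."""
    x = H[0][0] * u + H[0][1] * v + H[0][2]
    y = H[1][0] * u + H[1][1] * v + H[1][2]
    w = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("pixel maps to a point at infinity")
    return x / w, y / w  # divide by w to leave homogeneous coordinates


# Hypothetical calibration: 0.01 m per pixel, origin shifted by (2 m, 1 m).
# A real calibration would generally have a non-trivial bottom row as well.
H = [
    [0.01, 0.0, 2.0],
    [0.0, 0.01, 1.0],
    [0.0, 0.0, 1.0],
]

foot_point = (500, 300)  # bottom center of a bounding box, in pixels
print(apply_homography(H, *foot_point))
```

Once every camera's detections are projected into the same physical coordinate frame, trajectories from different cameras become directly comparable, which is what makes spatial constraints across cameras possible.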

Our customers are already excited about this release, with over 300 organizations signing up for Early Access. And with the Multi-Camera Tracking app at the forefront of this exciting new technology, we’re confident that we’ll continue to drive innovation and push the boundaries of what’s possible in computer vision and AI.

In conclusion, Metropolis AI Workflows & Microservices 1.0 is an exciting new development that’s set to revolutionize the world of computer vision and smart video analytics. With the Multi-Camera Tracking app leading the charge, we look forward to seeing what the future holds for this groundbreaking technology.